Fast research for an MVP

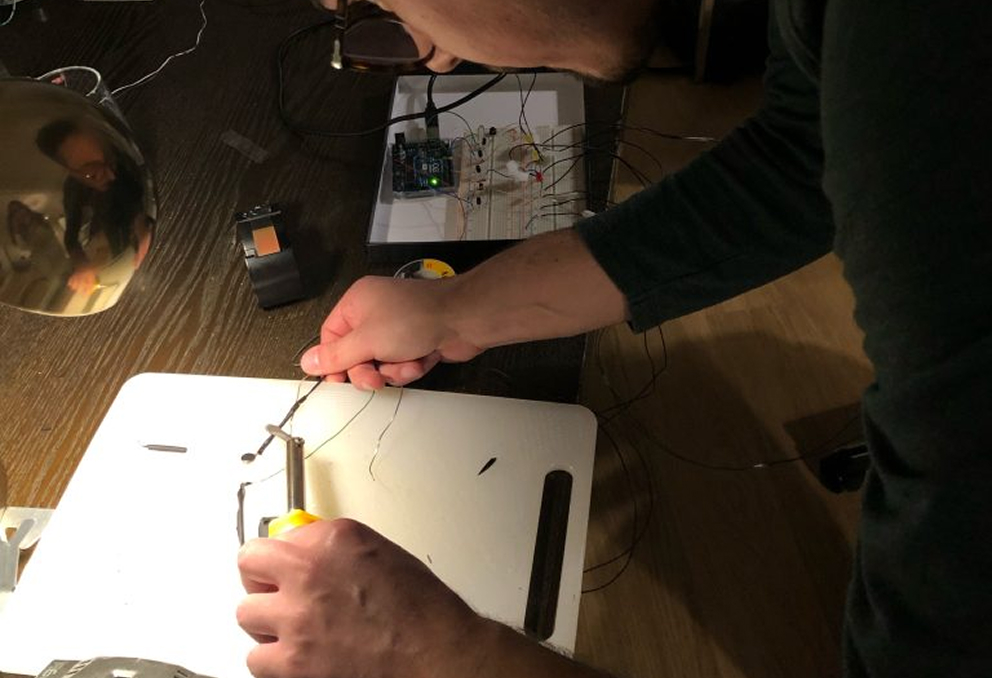

I conducted fast, focused secondary research on topics such as Calm Technology, Assistive Tech, Sensory Substitution, and Car UX. I also looked for examples of in-car interfaces for deaf drivers. Although such examples are rare, they helped shape the direction of my concept.

Key takeaways:

- Accessibility in car tech is still limited.

- Feedback must be easy to perceive without adding cognitive load.

- The interface should stay non-intrusive so that other people can use the car normally.

Wireframes and corresponding GUI prototype

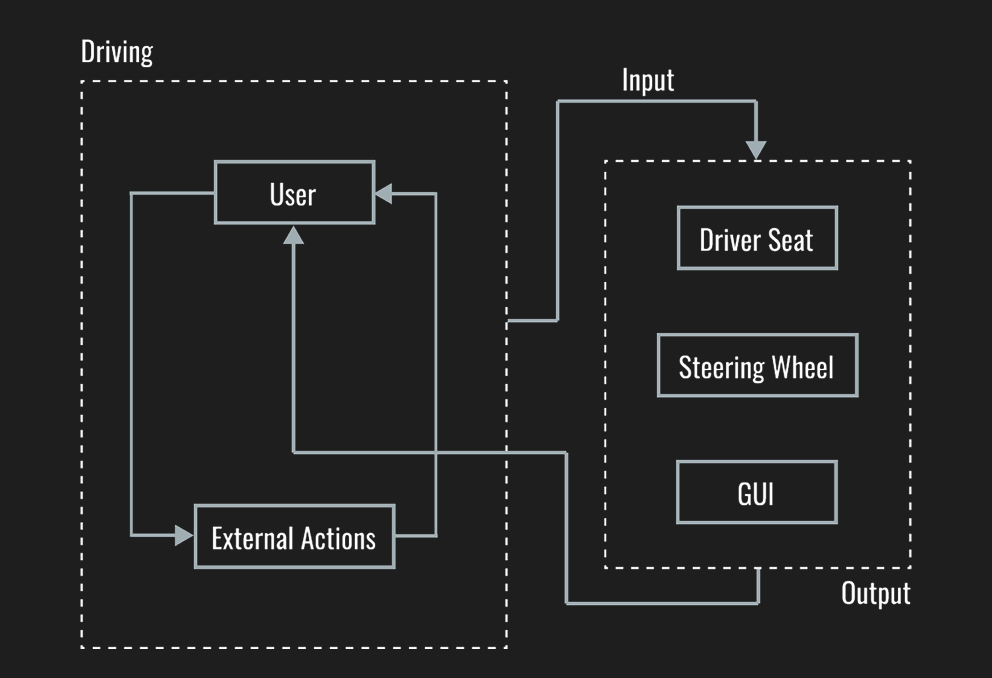

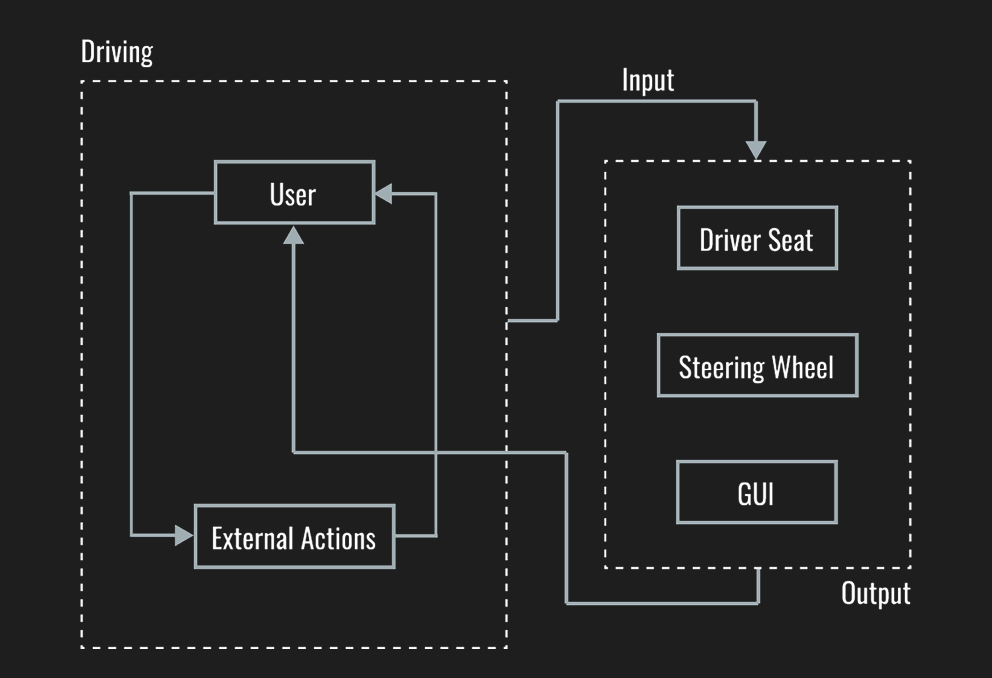

Interaction model based on the concept of "Implicit Interactions"